AWS Cost Optimization Part 1: How Buzzvil Saved More Than 100 Million KRW a Month on AWS

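Over the course of 2023, Buzzvil saved roughly 120 million KRW per month, or about 1.4 billion KRW per year, on AWS costs. We share that experience and our tips over several posts. AWS Cost Optimization Part 1: How Buzzvil Saved More Than 100 Million KRW a Month on AWS (in preparation) …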

(Artwork generated by Dalle2 with the following prompt: A cyberpunk Designer looking at multiple screens with a robotic arm with neon lights in a dark, smoky room)

I recently spent some time exploring the wonderful new world of possibilities offered by what is generally called AI Art. This article could cover the potential of the Tech itself, but that topic is already well covered, and I am a Designer, after all. So I also asked what this could mean for the Design paradigm, and then how it could be applied to the Ad industry.

Art is always ahead of things. It feeds creative fields as a raw, endless source of inspiration for anyone. Once again, the Art field leads the way, with or without everyone’s consent, through a new way to create artwork based on simple prompts.

If you read more about this topic, you’ll see many articles mentioning the limitations and risks. You’ll read that the Art world is either embracing it or rejecting it completely. You’ll also read that as visual expression becomes accessible to literally anyone, abuse and misuse will be among the challenges to solve. But let’s leave these concerns for later, and focus on the world of opportunities this opens for Designers.

But first, let’s have a look at what it can do today. MidJourney is one of the major Research labs that provide a creative generator through Discord or via their own WebApp.

When landing on Discord as a newbie, you are redirected to one of the free trial channels that let you test the service for free. All you have to do is type “/Imagine” followed by whatever you want to see translated into an image. The more details you give, the better and sharper the results you’ll get.

/Imagine “Discovered land, full of machines, animals, plants with the look of Jean Jullien’s artworks” will lead to creating this image in seconds:

Now, if you don’t know who Jean Jullien is, he is a French illustrator who does this kind of work:

Considering the unrealistic prompt I entered, the AI did a fairly good job of translating my intention into an image! The AI offers 4 variations for 1 prompt, and from there, you can create variations of any of these 4 variations, and so on. You can also start from an image and request to change or use it in any possible way.

The prompt becomes your tool to draw Art: what matters is your capacity to phrase an idea so that the AI can interpret it visually. The rest is all about variations and refinements!

There are 2 major players in this area, and both will let you play around for free for some time. Then both will offer ways to continue at a cost. The two platforms use different monetization solutions, but we can consider them fairly affordable for what they do. Considering that both are in beta, now is probably a good time to play around!

Seeing the excitement this creates on the Web, we can guess that other companies will try to develop the AI segment into more varied use cases.

The way these AIs work is truly captivating. Many of these systems are made of two components: the generator and the discriminator. Based on a given prompt, the generator will do its best to generate an accurate and original representation. The discriminator will challenge the outcome over iterations.
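To make that loop concrete, here is a minimal toy sketch in Python. It is not how any real model is implemented: the "image" is just a short list of numbers, the prompt is assumed to be already encoded as a vector, and the names `generate_variation`, `judge`, and `refine` are hypothetical. Real systems use trained neural networks and gradient-based updates, while this sketch swaps in a simple random search to show only the generate-then-judge idea.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def generate_variation(image, rng, step=0.3):
    """Toy 'generator': randomly nudge the current image vector."""
    return [x + rng.uniform(-step, step) for x in image]


def judge(image, prompt_vector):
    """Toy 'judge': score how well the image matches the prompt."""
    return cosine(image, prompt_vector)


def refine(prompt_vector, iterations=200, variations=4, seed=0):
    """Propose a few variations per round and keep the best-scoring one."""
    rng = random.Random(seed)
    best = [rng.uniform(-1, 1) for _ in prompt_vector]
    best_score = judge(best, prompt_vector)
    history = [best_score]
    for _ in range(iterations):
        for candidate in (generate_variation(best, rng) for _ in range(variations)):
            score = judge(candidate, prompt_vector)
            if score > best_score:
                best, best_score = candidate, score
        history.append(best_score)
    return best, history


# Hypothetical prompt embedding; a real one would come from a text encoder.
best, history = refine([1.0, 0.5, -0.2, 0.8, 0.0])
print(f"score went from {history[0]:.2f} to {history[-1]:.2f}")
```

The score can only stay the same or improve from one round to the next, which mirrors the "variations and refinements" workflow described earlier.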

Among the AI Art models, the most popular one that gained fame in 2022 is the VQGAN + CLIP model. VQGAN stands for “Vector Quantized Generative Adversarial Network”. CLIP stands for “Contrastive Language-Image Pre-training”. Long story short, the VQGAN model generates images, and the CLIP model judges how well the generated images match the text prompt. For more details about these models and how they interact, you might be interested in reading these articles:

Obviously, these models would need some serious adjustments, but the logic would remain largely the same. Let’s dive deep into the wonderful world of assumptions. We know that the current model uses a neural network that connects existing images from a large database and reads through their Alt Text (their textual descriptions). So as long as we can create a UI library that is properly documented, with descriptions at every level of a clean, atomic component architecture, the same logic could be applied, and an AI could be trained over such a database.
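As a rough illustration of what "documented at every level" could look like, the sketch below models a tiny component tree and flattens it into (component path, description) pairs, the kind of text-to-structure pairing such a training set might contain. Everything here is an assumption for illustration: the `Component` class, the `training_pairs` helper, and the sample library are hypothetical and do not come from any existing design tool.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class Component:
    """A UI component with a human-written description, like Alt Text for UI."""
    name: str
    description: str
    children: List["Component"] = field(default_factory=list)


def training_pairs(
    component: Component, path: Tuple[str, ...] = ()
) -> Iterator[Tuple[str, str]]:
    """Walk the tree depth-first and yield (component path, description)."""
    current = path + (component.name,)
    yield "/".join(current), component.description
    for child in component.children:
        yield from training_pairs(child, current)


# Hypothetical atomic-design library: organism > molecule > atom.
library = Component(
    "LoginForm",
    "Organism: form that lets a user sign in with email and password",
    [
        Component(
            "EmailField",
            "Molecule: labeled text input for an email address",
            [
                Component("Label", "Atom: short text naming a field"),
                Component("TextInput", "Atom: single-line text entry"),
            ],
        ),
        Component("SubmitButton", "Atom: primary button that submits the form"),
    ],
)

pairs = list(training_pairs(library))
for component_path, description in pairs:
    print(f"{component_path}: {description}")
```

The point of the sketch is only that a description exists at every node, so nothing in the library is left unlabeled.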

We also know that most AI Art generators are now interfaced with prompts: we just need to write down what we want to create, and the AI produces it through the model described above.

So we can assume that we could interface UI work the same way, simply describing what we want to mock up. Dalle-2 also adds details over parts of an image, making the process more refined. We could use the same technique to alter a part of the UI we want to refine. This AI would work inside an existing UI tool, where all of your settings would remain unchanged (styles, nudge amount, etc.). Technically, the AI could be fed from the Community resources, focused on the shared libraries you are using, or a mix of the two.

Considering how fast a mockup can be built nowadays, it could be interesting to compare the lead time of a Designer creating a mockup by manipulating the tool with that of typing a prompt. My guess is that once we get used to typing prompts (or speaking them), the lead time would be much shorter than that of any UI superstar. But this also brings us to one more potential development that could eventually save even more time. So far it seems doable to consider AI as a UI builder. But if the machine could also build up the entire UX flow, then this could mean drastic improvements in terms of efficiency.

The model would need some serious adjustments to create interconnections between screens and build an entire UX flow. But if the AI were able to crawl a large database of UX flows, we can imagine that the quality of the outcome could become as good as or better than what an experienced Designer could do. With our current tools, Design outcomes do not systematically connect screens to one another. We would need a database to train the AI to recognize UX patterns, but for this, we would first need to… create this database. Figma increasingly integrates Prototyping features inside components and recently made these prototyping interactions pervasive, so we can guess that sooner or later, Designers will be able to systematize interaction patterns. We can also guess that such an initiative should probably come from a tool like Figma (or Adobe?? 😬) or through their Plugin API and open community database. All this seems a little far off, right? But if you had told me a year ago that I could create Artwork this easily, I wouldn’t have believed it! So maybe the future of Design using AI isn’t so far off after all…

In the context of Buzzvil, and focusing on Ad Creative optimization, this is an entirely new perspective that could open the door to ground-breaking innovations someday.

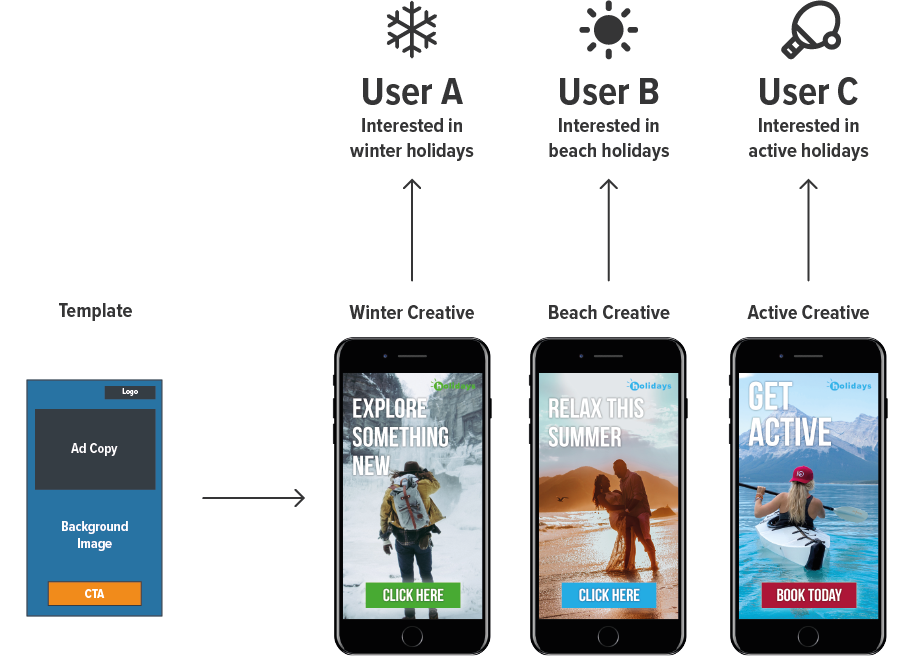

AdTech companies usually invest a lot in Ad creative optimization to increase performance. Basically, this is about showing different creatives to different people. The differences can vary and are based on what we know of user preferences. The optimization can alter the ad item itself, showing a particular product from a large inventory. That’s the 101 of Ad Creative optimization. Going one step further, for a particular product or service, the user’s interests, location, age, and gender can fill out the different parts of a predetermined template. This is good but also limited by the template logic applied. The result is alright, but we can easily say that there is room for improvement.

An example given by warroominc.com
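The template logic described above can be sketched in a few lines of Python. This is a hypothetical illustration only: the template text, the `greeting_for` rule, and the profile fields are assumptions, not how any real ad server is implemented.

```python
from string import Template

# Hypothetical predetermined template with slots to fill per user.
AD_TEMPLATE = Template("$greeting! $product is now available near $location.")


def greeting_for(age: int) -> str:
    """Pick a tone based on age (an arbitrary rule for illustration)."""
    return "Hey" if age < 30 else "Hello"


def fill_creative(profile: dict, product: str) -> str:
    """Fill the fixed template with what we know about the user."""
    return AD_TEMPLATE.substitute(
        greeting=greeting_for(profile["age"]),
        product=product,
        location=profile["location"],
    )


print(fill_creative({"age": 24, "location": "Seoul"}, "The new running shoe"))
print(fill_creative({"age": 45, "location": "Busan"}, "The new running shoe"))
```

Every output has the same shape, which is exactly the limitation: only the slots change, never the creative itself.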

In this case, the ad server could actually generate the prompts instead of us, based on the keywords attached to each user profile. And the AI could generate the creatives. Of course, we would need this AI to be specifically trained for this kind of marketing creatives, fed with the billions of ad campaigns that surround us all in our daily lives.
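As a minimal sketch of that idea, the function below turns the keywords attached to a user profile into a text prompt that could then be handed to an image generator. The function name `build_prompt`, its parameters, and the prompt wording are all assumptions made for illustration. No real ad server or generator API is being described.

```python
from typing import Iterable


def build_prompt(
    product: str,
    keywords: Iterable[str],
    style: str = "clean product photography",
) -> str:
    """Assemble a text prompt from a product and a user's interest keywords."""
    # Normalize keywords and drop duplicates while keeping their order.
    unique = list(dict.fromkeys(k.strip().lower() for k in keywords if k.strip()))
    parts = [f"An ad creative for {product}"]
    if unique:
        parts.append("appealing to someone interested in " + ", ".join(unique))
    parts.append(f"in the style of {style}")
    return ", ".join(parts)


# Hypothetical profile keywords, including a duplicate and stray whitespace.
print(build_prompt("a trail running shoe", ["Hiking", "hiking", " Camping "]))
```

Unlike a fixed template, the resulting prompt leaves the composition of the creative up to the generator.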

With the rise of zero-party data, which provides less creepy, more accurate information about customers, we could imagine pretty sharp creative generation and advanced prompts.
